How Flutter and Google Cloud Platform came together to solve a 'Learning' Problem

Here is a story of accomplishment and satisfaction that is worth sharing. There is an exciting technical side to it as well, which I hope you will enjoy!

First things first, What was the Problem?

My daughter is 5 years old, and she was finding it hard to follow the diction of her teacher and to spell words correctly. After a while I figured out that she needed some way to practice the Pronounce => Listen => Spell flow at home to get back on track.

Wait, we are talking about a 5-year-old who is already a bit of a mobile addict (Cartoons and Games 😏), and of course learning should feel like Fun to her 😃.

Here comes the 'Home-Made' Solution

The solution was to build the ability:

- For Parents to type a word that the Kid cannot see but can hear.

- For the Kid to type what he/she heard.

- To check whether the Kid typed the word correctly, and to notify him/her accordingly.

- Don't forget, it should be Fun Filled and Motivational for a Kid!

What could be better than doing all of this as a mobile app? With that thought, here are some screens that realize the solution I had envisioned:

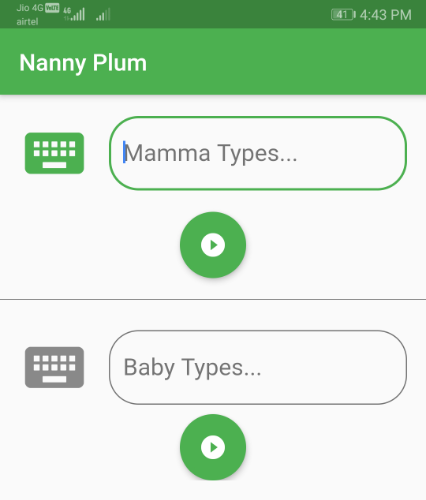

An Interface for Parents to give a Clue and the Kid to Respond

- A TextBox for Mamma (could be Papa as well) to write the word. We want this to be obscured so that the Kid cannot see it.

- Mamma presses a button that pronounces the word clearly.

- A TextBox for the Kid to type what he/she heard and to play it back.

- The interface looks somewhat like this:

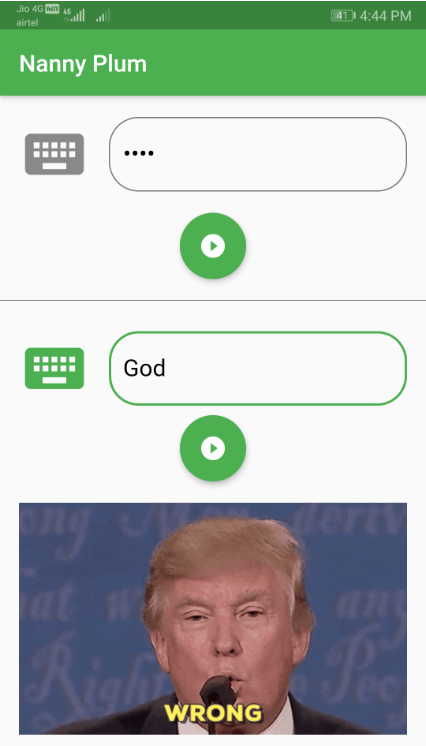

When the Kid made a Mistake!

- Say Mamma typed the word Good, but the Kid responded with God (missing a letter, O).

- The Kid should be shown something that makes him/her feel that something is WRONG! (... and nothing political about it 😉)

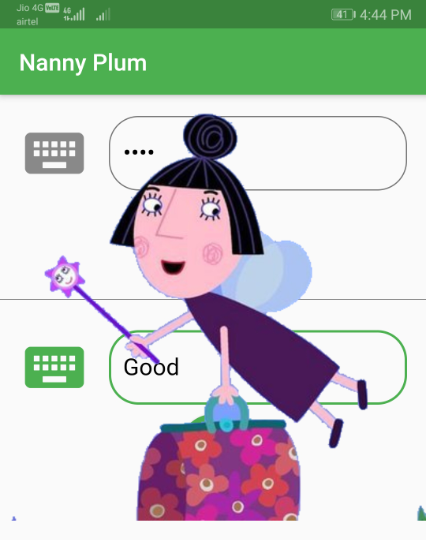

When the Kid got it Right

- Time to appreciate and acknowledge that the answer was correct.

- How about acknowledging it with the magical appearance of the Kid's favorite Cartoon Character? Hmm... motivating enough, at least for the Kid!
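The check behind these two outcomes can be as small as a string comparison. The sketch below is a guess at how it might look, not the app's actual code: the function name `isSpelledCorrectly` is hypothetical, and ignoring case and surrounding spaces is my assumption about what feels fair to a 5-year-old.

```dart
/// Returns true when the Kid's answer matches the word Mamma typed.
///
/// Assumption: case and leading/trailing spaces are ignored, so that
/// " good " counts as a correct answer for "Good".
bool isSpelledCorrectly(String expected, String typed) {
  return expected.trim().toLowerCase() == typed.trim().toLowerCase();
}
```

With this, `isSpelledCorrectly('Good', 'God')` is false, so the WRONG screen would be shown, while `isSpelledCorrectly('Good', 'good')` is true, so the favorite character would appear.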

TL;DR: In case you want to see how it works, feel free to jump to the section See it in Action below.

How about the Technical Side of it?

I decided that Flutter was the way to go for the mobile app development. But wait, I needed something that could convert Text to Speech with High Accuracy and the Right Accent. That's when I decided to go with the Google Cloud APIs from Google Cloud Platform (GCP).

Setup Google Cloud Text to Speech API

The Google Cloud Text To Speech API, powered by DeepMind's WaveNet, is a really amazing technology that can synthesize speech that mimics a real person's voice. We need to do some quick setup to get it working.

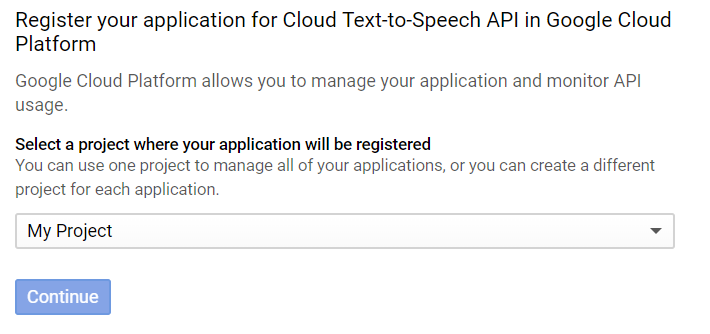

- First, sign up for Google Cloud Platform using your Gmail ID. Follow the quickstart guide, and make sure to enable the API for your project as shown below:

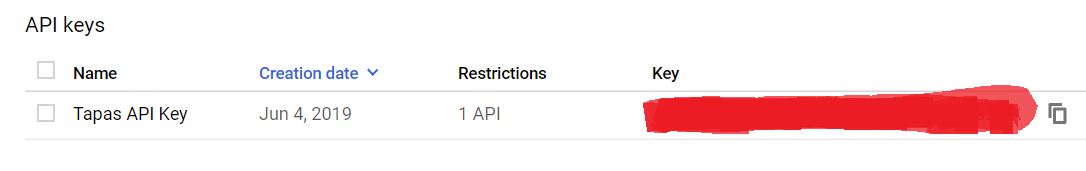

Once the API is enabled, you can go to this page to copy the API key:

Note: The API key is confidential. Please do not share it with anyone, as it could be misused.
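One way to keep the key out of the source code is to pass it at build time instead of typing it into a file. This is a minimal sketch, assuming you build with `--dart-define=TTS_API_KEY=<your key>`; the define name `TTS_API_KEY` and the helper `requireApiKey` are made up for this example.

```dart
/// Value of the hypothetical TTS_API_KEY compile-time define.
/// It is an empty string when the define is not passed.
const String ttsApiKey = String.fromEnvironment('TTS_API_KEY');

/// Returns [key] if it looks usable, and fails loudly otherwise so that a
/// missing key is noticed before any request is sent.
String requireApiKey(String key) {
  if (key.trim().isEmpty) {
    throw StateError('API key is missing. Pass --dart-define=TTS_API_KEY=...');
  }
  return key;
}
```

A key compiled into the app can still be extracted from the binary, so this only keeps it out of the repository. Restricting the key in the Cloud Console is still worthwhile.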

Text to Speech APIs

Google Cloud Text To Speech provides native client libraries, but they cannot be used on Android and iOS. Instead, it provides a REST API to interact with the Cloud Text To Speech API. There are 2 primary endpoints to use:

- /voices - Get the list of all the voices available in the Cloud Text To Speech API for the user to select from.

- /text:synthesize - Perform text-to-speech synthesis using the text, language, and audioConfig we provide.

For my app, I have used the /text:synthesize API alone. In case you would like to offer a choice of voice and accent, the /voices API can be used to list all the supported voices to select from.
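If you do want a voice picker, the /voices call could be sketched roughly as follows. This is not part of my app. It assumes null-safe Dart, the host `texttospeech.googleapis.com`, and the same `v1beta1` version used for synthesis; the function names `parseVoiceNames` and `fetchVoiceNames` are hypothetical.

```dart
import 'dart:convert';
import 'dart:io';

/// Extracts the voice names from a /voices response body.
/// The response is a JSON object with a `voices` array, and each entry
/// carries a `name` such as "en-US-Wavenet-F".
List<String> parseVoiceNames(String responseBody) {
  final decoded = jsonDecode(responseBody) as Map<String, dynamic>;
  final voices = (decoded['voices'] as List<dynamic>?) ?? <dynamic>[];
  return voices
      .map((voice) => (voice as Map<String, dynamic>)['name'] as String)
      .toList();
}

/// Fetches the voice names for one language, for example 'en-US'.
Future<List<String>> fetchVoiceNames(String apiKey, String languageCode) async {
  final uri = Uri.https('texttospeech.googleapis.com', '/v1beta1/voices',
      {'languageCode': languageCode});
  final client = HttpClient();
  try {
    final request = await client.getUrl(uri);
    request.headers.add('X-Goog-Api-Key', apiKey);
    final response = await request.close();
    final body = await utf8.decoder.bind(response).join();
    if (response.statusCode != HttpStatus.ok) {
      throw HttpException('Listing voices failed: ${response.statusCode}',
          uri: uri);
    }
    return parseVoiceNames(body);
  } finally {
    // Release the underlying connections once the call is done.
    client.close();
  }
}
```

Keeping the parsing in its own function makes it easy to check against a saved response without calling the network.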

At the Flutter side

Now that the Google Cloud Text To Speech API is set up, we can use it from the Flutter code.

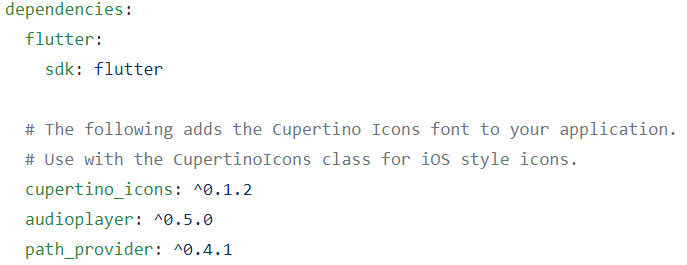

Add dependencies to the Flutter project

pubspec.yaml will be using 2 external dependencies:

- audioplayer: A Flutter audio plugin (ObjC/Java) to play remote or local audio files.

- path_provider: A Flutter plugin for finding commonly used locations on the filesystem.

Set up a service to fetch the API Key

    class APIKeyService {
      static const _apiKey = "<ADD_YOUR_API_KEY_HERE>";

      static String fetch() {
        return _apiKey;
      }
    }

and set the header as,

    httpRequest.headers.add('X-Goog-Api-Key', APIKeyService.fetch());

Next, call the /text:synthesize API

    Future<dynamic> synthesizeText(String text, String name, String languageCode) async {
      try {
        final uri = Uri.https(_apiURL, '/v1beta1/text:synthesize');
        // Request body: the text to speak, the voice to use, and the output encoding.
        final Map json = {
          'input': {'text': text},
          'voice': {'name': name, 'languageCode': languageCode},
          'audioConfig': {'audioEncoding': 'MP3'}
        };
        final jsonResponse = await _postJson(uri, json);
        if (jsonResponse == null) return null;
        // audioContent holds the synthesized MP3 as a base64-encoded string.
        final String audioContent = jsonResponse['audioContent'];
        return audioContent;
      } on Exception catch (e) {
        print("$e");
        return null;
      }
    }

Finally, play the Audio

    // Synthesize the word with a hard-coded WaveNet voice.
    final String audioContent = await TextToSpeechAPI().synthesizeText(text, 'en-US-Wavenet-F', 'en-US');
    if (audioContent == null) return;
    // Decode the base64 payload and write it to a temporary MP3 file.
    final bytes = Base64Decoder().convert(audioContent, 0, audioContent.length);
    final dir = await getTemporaryDirectory();
    final file = File('${dir.path}/wavenet.mp3');
    await file.writeAsBytes(bytes);
    // Play the file from local storage.
    await audioPlugin.play(file.path, isLocal: true);

Note: When we call the synthesizeText method, I pass en-US-Wavenet-F. This is the voice that I have hard-coded here.
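The synthesizeText method relies on a `_postJson` helper that this post does not show. The following is a rough sketch of what such a helper could look like with `HttpClient` from `dart:io`. It is not the code from the repository: the top-level name `postJson`, the `apiKey` parameter, and `encodeJsonBody` are assumptions made for illustration, and it is written for null-safe Dart.

```dart
import 'dart:convert';
import 'dart:io';

/// Encodes a request body as UTF-8 JSON bytes.
List<int> encodeJsonBody(Map<String, dynamic> body) =>
    utf8.encode(jsonEncode(body));

/// POSTs [body] as JSON to [uri] with the API key header and returns the
/// decoded JSON response, or null when the call fails.
Future<Map<String, dynamic>?> postJson(
    Uri uri, Map<String, dynamic> body, String apiKey) async {
  final client = HttpClient();
  try {
    final request = await client.postUrl(uri);
    request.headers.add('X-Goog-Api-Key', apiKey);
    request.headers.contentType = ContentType.json;
    request.add(encodeJsonBody(body));
    final response = await request.close();
    final responseBody = await utf8.decoder.bind(response).join();
    if (response.statusCode != HttpStatus.ok) {
      print('Request failed with status ${response.statusCode}');
      return null;
    }
    return jsonDecode(responseBody) as Map<String, dynamic>;
  } on Exception catch (e) {
    print('$e');
    return null;
  } finally {
    // Release the underlying connections once the call is done.
    client.close();
  }
}
```

Returning null on failure mirrors the `if (jsonResponse == null) return null;` check in synthesizeText, so the caller simply plays nothing when the request does not succeed.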

The repository here has the bootstrap code, which can be used to build an app like the one discussed in this post.

See it in Action

You can see the app in action here (a recording of around 30 seconds).

Ending Note

Have you noticed that I have named the app Nanny Plum? Well, that is a Character (she is a Magician!) from the famous Cartoon show Ben and Holly's Little Kingdom.

These days, she is undoubtedly my daughter's favorite 😊.

I hope you learned something useful here while building something Fun and Awesome 👍👍👍. Pairing a Flutter app with a cloud Text to Speech API is a very powerful concept that can solve many use cases with ease. Go ahead and Explore, Cheers!!!